If you are a tech enthusiast and want to learn about one of the most talked-about technologies, AI, and the hardware behind it, this post is worth a few minutes of your time. We focus on the iPhone and its ecosystem here and will cover other neural engines in a separate post.

What do the world’s tallest twin towers and the iPhone 13 have in common?

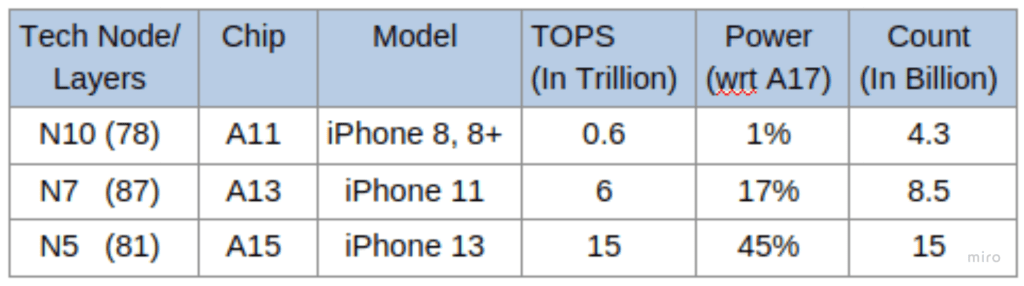

- The iPhone 13 was launched with the A15 SoC, which contains 6 CPU cores, up to 5 GPU cores and a 16-core NPU (the Neural Engine, first introduced with the A11 Bionic in 2017) that performs about 15 TOPS (trillion operations per second).

- The number of layers in N5, the process node on which the A15 SoC is built (~81 layers), is close to the number of floors in the world’s tallest twin towers (88).

- The A15 SoC has about 15 billion transistors, roughly ten times the population of the world’s most populous country (India, about 1.4 billion people).

- Newer models pack in more transistors for better performance, longer battery life, and on-device ML and AI features.

- The A15 SoC is manufactured at TSMC Fab 18, which uses ASML's EUV lithography machines to keep the layer count below 100 while increasing transistor density.

- Main companies involved: ASML, TSMC, ARM, Apple and others.

What are the different processing units in the A15 and later SoCs?

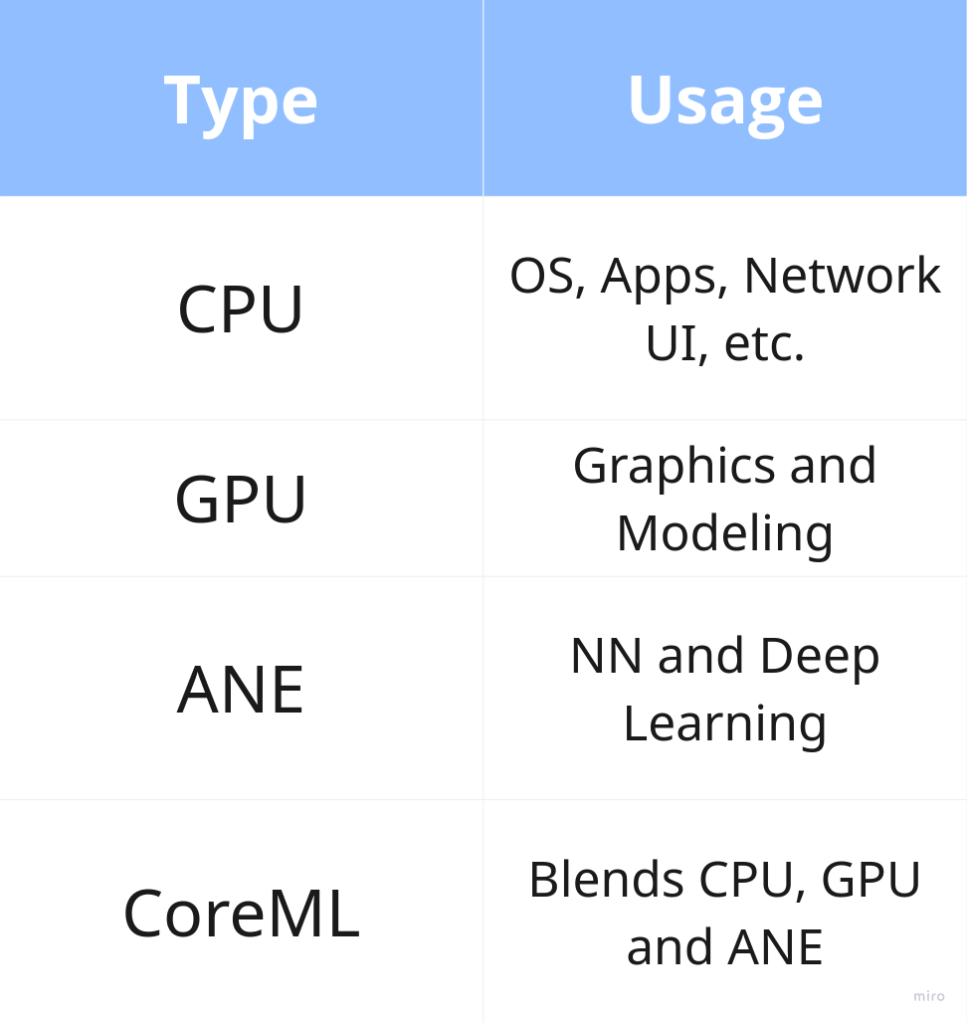

Apple SoCs mainly contain CPUs, GPUs, NPUs, image processors, and other processing units. Each has a dedicated job; when work lands on the wrong unit, the phone misbehaves, for example by draining the battery quickly and heating up.

What are the different AI and related applications present on the iPhone?

Knowingly or not, we use AI and related applications every day on our smartphones. Here are some of them:

- Searching Photos for text, scenery or a location using a text or voice command.

- Tapping on text in a photo to copy, translate or search it.

- Long-pressing an object in a photo to lift it from its background.

- Processing voice input with Siri.

- Using Face ID.

- Summarising emails, drafting replies and summarising notifications.

- Creating Animoji.

- Generating real-time captions on videos.

Do you know which domains need accuracy (or precision) over memory and vice versa?

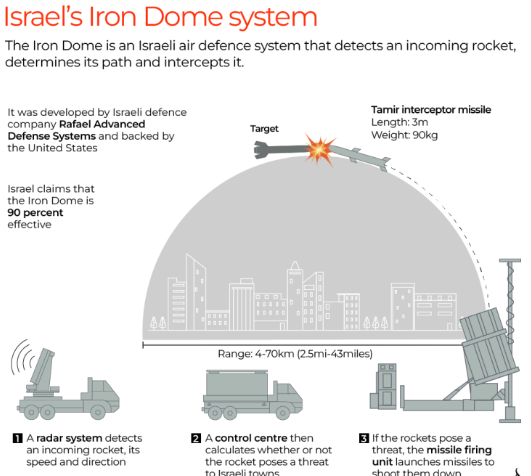

During the Vietnam War, Sidewinder missiles built with vacuum tubes missed their target, the Thanh Hoa Bridge, more than 92% of the time, while the latest Iron Dome can neutralise more than 90% of incoming targets. So domains like defence systems, scientific computing and financial calculations prefer accuracy, while mobile devices and their applications prefer a smaller memory footprint, because very high precision is not critical for them.

Deep dive into one AI-related application

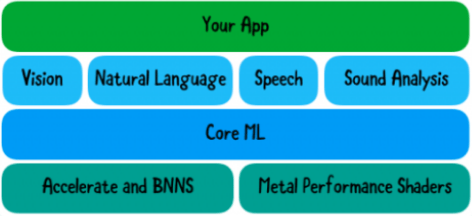

The voice-driven image search in Photos shown above involves four major steps and runs fully on-device, offline and in real time with low latency.

- Speech-to-text: on-device speech recognition, run on the Apple Neural Engine (ANE), turns the voice command into text.

- Query understanding: Core ML NLP models classify the query by type (object-based, location-based, date-based, etc.).

- Image classification: each photo is indexed on-device after it is taken, using deep learning on the ANE, by object, scene, text, face, etc.

- Matching: the system matches the classified query (step two) against the indexed content (step three) using ML and shows the result.

The CPU and GPU are preferred for non-ML work, while the NPU (the ANE on Apple devices) is preferred for compute-intensive ML and DL tasks.
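To make the flow of the four steps concrete, here is a toy sketch in Python. It is not how Apple implements Photos search: every name in it (`Photo`, `speech_to_text`, `classify_query`, `build_index`, `search`) is hypothetical, the speech step is a stand-in that just normalises a string, and the "models" are plain dictionary lookups.

```python
from dataclasses import dataclass, field

# Hypothetical vocabulary used only for this illustration.
STOP_WORDS = {"in", "at", "on", "the", "a", "of", "show", "me"}
KNOWN_LOCATIONS = {"pisa", "florence"}


@dataclass
class Photo:
    name: str
    labels: set = field(default_factory=set)  # labels a classifier might produce


def speech_to_text(audio: str) -> str:
    """Step 1 stand-in: pretend the audio is already transcribed."""
    return audio.lower().strip()


def classify_query(text: str) -> dict:
    """Step 2: tag each query term as a location or an object."""
    terms = {}
    for word in text.split():
        if word in STOP_WORDS:
            continue
        terms[word] = "location" if word in KNOWN_LOCATIONS else "object"
    return terms


def build_index(photos: list) -> dict:
    """Step 3: build an inverted index from label to photo names."""
    index = {}
    for photo in photos:
        for label in photo.labels:
            index.setdefault(label, set()).add(photo.name)
    return index


def search(audio: str, index: dict) -> list:
    """Step 4: keep only photos that match every term in the query."""
    terms = classify_query(speech_to_text(audio))
    results = None
    for term in terms:
        matches = index.get(term, set())
        results = matches if results is None else results & matches
    return sorted(results or [])


photos = [
    Photo("img1", {"dog", "beach"}),
    Photo("img2", {"dog", "pisa"}),
    Photo("img3", {"tower", "pisa"}),
]
index = build_index(photos)
print(search("Dog in Pisa", index))  # ['img2']
```

The point of the sketch is the split of work: indexing happens once per photo, ahead of time, so a query only needs a cheap lookup and intersection.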

Why are neural engines on demand?

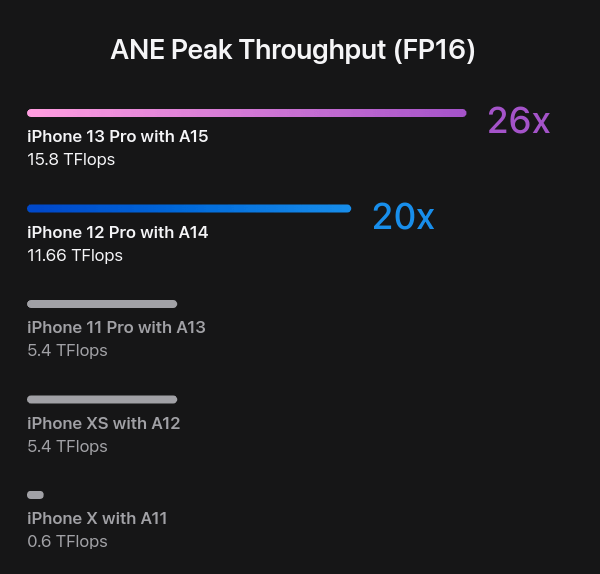

There was a time when CPUs (and related companies) ruled the semiconductor market. With the demand for parallel computing, GPUs (and new related companies) are leading in multiple domains like graphics, data centers, automated cars, AI, etc. From 2017, NPUs have been part of the SoCs, like the Apple Neural Engine (aka ANE). Its throughput has reached 27 times since then.

- Much faster than CPU & GPU.

- More energy efficient.

- Need less memory.

- Do math computations like NN, ML, DL, etc.

- Like GPUs without graphic processing.

Why is the ANE so efficient?

The ANE mostly uses the half-precision floating-point format (FP16) for its operations.

- FP16 has 1 sign bit, only 5 bits for the exponent (base 2) and 10 bits for the fraction, the digits after the binary point.

- Fewer exponent bits -> smaller range, and fewer fraction bits -> lower precision, in comparison to FP32 and FP64.

- FP16 occupies less memory (2 bytes per value) than FP32 (4 bytes) and FP64 (8 bytes).

- For 1 million parameters, FP16 uses only 2 MB, while FP32 uses 4 MB.

- FP16 operations consume less power, which suits workloads that involve a large number of calculations but don’t require a high level of precision.
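The FP16 layout and memory figures above can be checked with a small Python sketch. It uses the standard library's `struct` module, whose `e` format code is IEEE 754 half precision; the helper names (`fp16_fields`, `to_fp16`, `param_memory_bytes`) are made up for this illustration.

```python
import struct


def fp16_fields(x: float) -> tuple:
    """Return the (sign, exponent, fraction) bit fields of x stored as FP16."""
    (bits,) = struct.unpack("<H", struct.pack("<e", x))
    sign = bits >> 15                # 1 bit
    exponent = (bits >> 10) & 0x1F   # 5 bits, stored with a bias of 15
    fraction = bits & 0x3FF          # 10 bits
    return sign, exponent, fraction


def to_fp16(x: float) -> float:
    """Round x to the nearest FP16 value and return it as a Python float."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def param_memory_bytes(num_params: int, bytes_per_param: int) -> int:
    """Memory needed to store the parameters, ignoring any overhead."""
    return num_params * bytes_per_param


print(fp16_fields(1.0))                   # (0, 15, 0)
print(to_fp16(0.1))                       # 0.0999755859375
print(param_memory_bytes(1_000_000, 2))   # 2000000 bytes, i.e. 2 MB for FP16
print(param_memory_bytes(1_000_000, 4))   # 4000000 bytes, i.e. 4 MB for FP32
```

Note how 0.1 comes back as 0.0999755859375: with only 10 fraction bits, FP16 keeps roughly three significant decimal digits.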

FP16: High efficiency but low accuracy.

FP64: Low efficiency but high accuracy.
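A quick way to see this trade-off is to add up many small numbers. The sketch below emulates FP16 arithmetic in plain Python by rounding to half precision after every addition (via the `struct` module's `e` format); the helper names `to_fp16` and `sum_fp16` are hypothetical, and real hardware would accumulate differently (often in a wider format), so treat it as an illustration only.

```python
import struct


def to_fp16(x: float) -> float:
    """Round x to the nearest FP16 value and return it as a Python float."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def sum_fp16(values) -> float:
    """Sum values while rounding the running total to FP16 after each step."""
    total = 0.0
    for v in values:
        total = to_fp16(total + to_fp16(v))
    return total


values = [0.01] * 10_000

exact = sum(values)        # Python floats are FP64: very close to 100
approx = sum_fp16(values)  # the FP16 running total gets stuck far below 100

print(exact)
print(approx)  # 32.0
```

Once the running total reaches 32, the gap between neighbouring FP16 values is larger than twice the 0.01 being added, so every further addition rounds back to the same total. This is why FP16 suits workloads that tolerate approximate results rather than financial or scientific calculations.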

Computational comparison on a cloud provider:

550 PFLOPS (for FP16) vs 37 PFLOPS (for FP64), roughly a 15x difference.

